Preface

This article walks through the basic usage of Elasticsearch, Kibana, and Elasticsearch-head.

References

Official ES documentation: https://www.elastic.co/guide/cn/elasticsearch/guide/current/index-doc.html

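| Relational DB | Elasticsearch |
|---|---|
| Database | Index (indices) |
| Table | Type (types) |
| Row | Document (documents) |
| Column | Field (fields) |

Note that mapping types are deprecated as of Elasticsearch 7.0 and removed in 8.0, so the table-to-type analogy only holds for older versions.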

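CRUD overview

| Operation | HTTP method | Route |
|---|---|---|
| Create (index) a document | PUT | /{index}/{type}/{id} |
| Get a document | GET | /{index}/{type}/{id} |
| Update a document (replaces the whole document) | POST | /{index}/{type}/{id} |
| Delete a document | DELETE | /{index}/{type}/{id} |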
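All four routes share the `/{index}/{type}/{id}` path. Only the HTTP method changes, along with whether a JSON body is sent.

Below is a minimal Python sketch of that pattern using only the standard library. It assumes a local node at `http://127.0.0.1:9200`, as in the curl examples. `doc_request` is a hypothetical helper, not part of any official Elasticsearch client, and it only builds the request without sending it:

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:9200"  # assumption: a single local node, as in the curl examples


def doc_request(method, index, doc_type, doc_id, body=None):
    """Build (but do not send) a request for /{index}/{type}/{id}."""
    # Quote each segment so an ID containing "/" or spaces stays one path segment.
    path = "/".join(urllib.parse.quote(str(part), safe="") for part in (index, doc_type, doc_id))
    data, headers = None, {}
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return urllib.request.Request(f"{BASE_URL}/{path}", data=data, headers=headers, method=method)


# One request per row of the CRUD table.
create = doc_request("PUT", "website", "blog", 123, {"title": "My first blog entry"})
read = doc_request("GET", "website", "blog", 123)
update = doc_request("POST", "website", "blog", 123, {"title": "Edited title"})
delete = doc_request("DELETE", "website", "blog", 123)

for req in (create, read, update, delete):
    print(req.get_method(), req.full_url)
```

Passing one of these objects to `urllib.request.urlopen` would send it. That step needs a running node, so it is left out here.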

Routes

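A document is uniquely identified by its `_index`, `_type`, and `_id`. We can supply our own `_id` value or let the index API generate one. For example, suppose our index is called `website`, the type is `blog`, and we choose `123` as the ID. The index request then looks like this:

```
PUT /website/blog/123
{
  "title": "My first blog entry",
  "text": "Just trying this out...",
  "date": "2014/01/01"
}
```

Using Kibana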

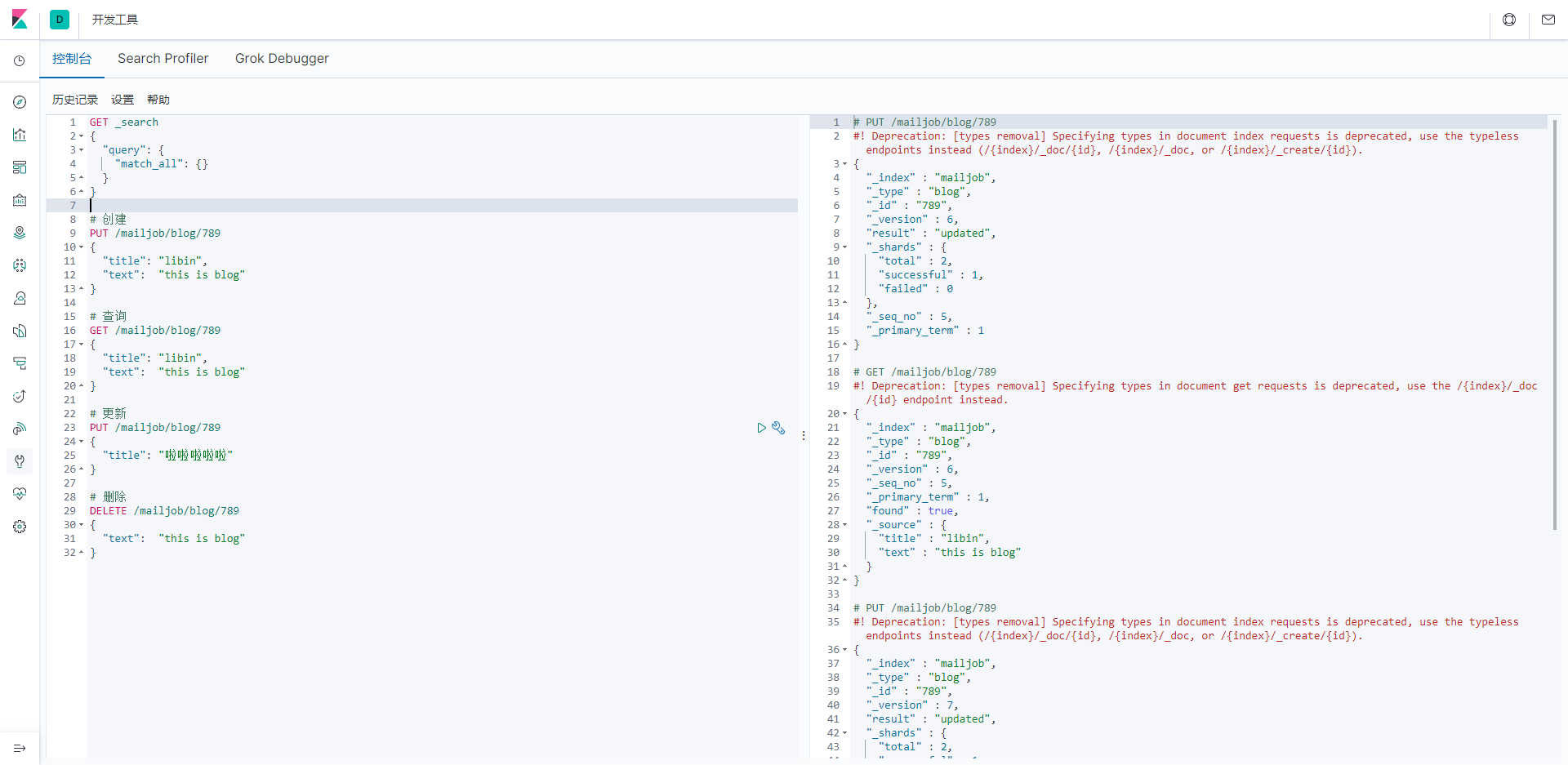

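```
# Create
PUT /mailjob/blog/789
{
  "title": "libin",
  "text": "this is blog"
}

# Get: no request body; the response's _source holds {"title": "libin", "text": "this is blog"}
GET /mailjob/blog/789

# Update: a PUT to the same ID replaces the whole document, so "text" is dropped
PUT /mailjob/blog/789
{
  "title": "啦啦啦啦啦"
}

# Delete: no request body needed
DELETE /mailjob/blog/789
```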

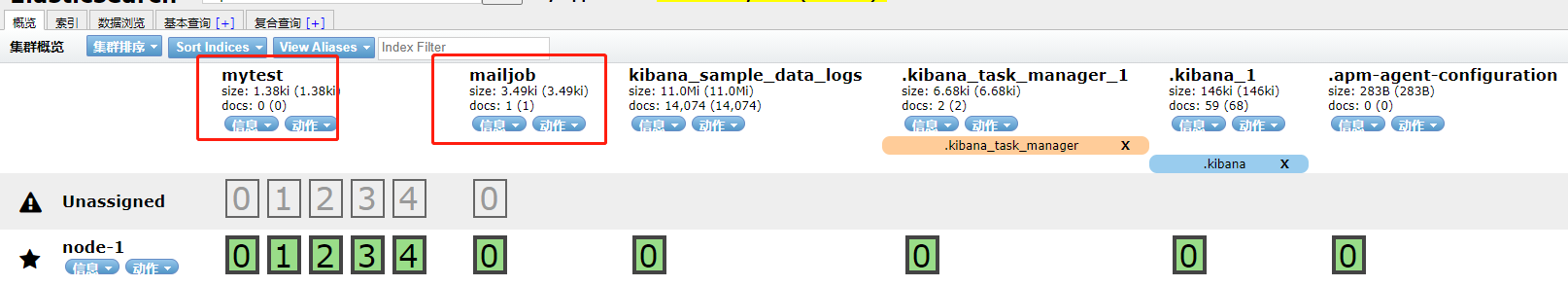
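The update above resends only `title`. A PUT (or POST) to `/{index}/{type}/{id}` replaces the stored `_source` wholesale, so the `text` field is gone afterwards.

To keep the other fields, Elasticsearch offers the `_update` API with a `doc` body: `POST /{index}/{type}/{id}/_update` on 6.x, or `POST /{index}/_update/{id}` on 7.x.

The toy model below is a plain Python dict, not Elasticsearch. `ToyIndex`, `index_doc`, and `partial_update` are hypothetical names. It only contrasts the two behaviors for flat documents:

```python
import copy


class ToyIndex:
    """In-memory stand-in for one index/type; NOT how Elasticsearch stores data."""

    def __init__(self):
        self.docs = {}  # id -> (version, source)

    def index_doc(self, doc_id, source):
        # Like PUT/POST /{index}/{type}/{id}: the new body replaces the old source entirely.
        version = self.docs[doc_id][0] + 1 if doc_id in self.docs else 1
        self.docs[doc_id] = (version, copy.deepcopy(source))
        return "updated" if version > 1 else "created"

    def partial_update(self, doc_id, partial):
        # Like _update with a "doc" body: given fields overwrite, others are kept.
        # Toy simplifications: flat documents only, and a missing ID raises KeyError.
        version, source = self.docs[doc_id]
        self.docs[doc_id] = (version + 1, {**source, **partial})
        return "updated"

    def get(self, doc_id):
        return self.docs[doc_id][1]


replaced = ToyIndex()
replaced.index_doc("789", {"title": "libin", "text": "this is blog"})
replaced.index_doc("789", {"title": "啦啦啦啦啦"})
print(replaced.get("789"))  # {'title': '啦啦啦啦啦'}

merged = ToyIndex()
merged.index_doc("789", {"title": "libin", "text": "this is blog"})
merged.partial_update("789", {"title": "啦啦啦啦啦"})
print(merged.get("789"))  # {'title': '啦啦啦啦啦', 'text': 'this is blog'}
```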

Using Elasticsearch-head

Using Elasticsearch on Linux
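Both curl calls in this section return a JSON envelope around the document. When scripting them, a minimal Python sketch like the one below can pick out the useful fields.

`source_of` and `write_ok` are hypothetical helpers. The sample strings are the responses printed in this section, with the GET one compacted onto one line:

```python
import json


def source_of(get_response):
    """Return the _source of a GET response, or None if the document was not found."""
    body = json.loads(get_response)
    return body["_source"] if body.get("found") else None


def write_ok(write_response):
    """True when a write (index/update/delete) reports no failed shard copies."""
    body = json.loads(write_response)
    return body["_shards"]["failed"] == 0


get_resp = '{"_index":"mailjob","_type":"blog","_id":"789","_version":1,"_seq_no":8,"_primary_term":1,"found":true,"_source":{"title": "libin", "text": "this is blog"}}'
update_resp = '{"_index":"mailjob","_type":"blog","_id":"789","_version":2,"result":"updated","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":9,"_primary_term":1}'

print(source_of(get_resp))  # {'title': 'libin', 'text': 'this is blog'}
print(write_ok(update_resp), json.loads(update_resp)["result"])  # True updated
```

In the update response, `total` is 2 but `successful` is 1. On a single-node setup the missing copy is most likely the replica shard, which cannot be allocated on the same node as its primary. Only a `failed` value greater than 0 indicates an actual write error.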

Query

```
[root@VM-0-15-centos home]# curl -X GET 'http://127.0.0.1:9200/mailjob/blog/789'
{"_index":"mailjob","_type":"blog","_id":"789","_version":1,"_seq_no":8,"_primary_term":1,"found":true,"_source":{
  "title": "libin",
  "text": "this is blog"
}}
```

Update

```
[root@VM-0-15-centos home]# curl -H 'Content-Type: application/json' -X POST 'http://127.0.0.1:9200/mailjob/blog/789' -d'{"title": "libin"}'
{"_index":"mailjob","_type":"blog","_id":"789","_version":2,"result":"updated","_shards":{"total":2,"successful":1,"failed":0},"_seq_no":9,"_primary_term":1}
```

IK analyzer test

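IK provides two tokenization algorithms, `ik_smart` and `ik_max_word`. `ik_smart` produces the coarsest split (the fewest tokens), while `ik_max_word` produces the finest-grained split.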
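The difference between the two modes can be pictured with a toy dictionary segmenter. This is not IK's real implementation, which ships its own dictionaries and disambiguation rules. It is a sketch that assumes a tiny hand-written dictionary.

- `smart_split` uses greedy forward maximum matching to emit the fewest, longest words.
- `max_word_split` emits every dictionary word that starts at each position, so tokens may overlap.

```python
# Hypothetical mini dictionary, for illustration only.
DICTIONARY = {"插件", "来了", "中华", "人民", "共和国", "中华人民共和国"}
MAX_LEN = max(len(word) for word in DICTIONARY)


def _ascii_run_end(text, i):
    # Treat a run of ASCII letters/digits as one English token, like "es" in the outputs below.
    j = i
    while j < len(text) and text[j].isascii() and text[j].isalnum():
        j += 1
    return j


def smart_split(text):
    """Greedy forward maximum matching: take the longest dictionary word at each position."""
    tokens, i = [], 0
    while i < len(text):
        end = _ascii_run_end(text, i)
        if end > i:
            tokens.append(text[i:end])
            i = end
            continue
        for size in range(min(MAX_LEN, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in DICTIONARY:  # fall back to a single character
                tokens.append(piece)
                i += size
                break
    return tokens


def max_word_split(text):
    """Emit every dictionary word starting at each position, longest first."""
    tokens, i = [], 0
    while i < len(text):
        end = _ascii_run_end(text, i)
        if end > i:
            tokens.append(text[i:end])
            i = end
            continue
        # Toy simplification: a character not covered by any dictionary word is skipped.
        tokens.extend(text[i:i + size] for size in range(min(MAX_LEN, len(text) - i), 0, -1)
                      if text[i:i + size] in DICTIONARY)
        i += 1
    return tokens


print(smart_split("es插件来了"))         # ['es', '插件', '来了']
print(smart_split("中华人民共和国"))      # ['中华人民共和国']
print(max_word_split("中华人民共和国"))   # ['中华人民共和国', '中华', '人民', '共和国']
```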

For comparison, the built-in `standard` analyzer splits the Chinese part into single characters:

```
GET _analyze
{
  "analyzer" : "standard",
  "text" : "es插件来了"
}
```

Response:

```
{
  "tokens" : [
    {
      "token" : "es",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "<ALPHANUM>",
      "position" : 0
    },
    {
      "token" : "插",
      "start_offset" : 2,
      "end_offset" : 3,
      "type" : "<IDEOGRAPHIC>",
      "position" : 1
    },
    {
      "token" : "件",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "<IDEOGRAPHIC>",
      "position" : 2
    },
    {
      "token" : "来",
      "start_offset" : 4,
      "end_offset" : 5,
      "type" : "<IDEOGRAPHIC>",
      "position" : 3
    },
    {
      "token" : "了",
      "start_offset" : 5,
      "end_offset" : 6,
      "type" : "<IDEOGRAPHIC>",
      "position" : 4
    }
  ]
}
```
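The standard analyzer segments text with the Unicode word-break rules (UAX #29), which treat each Chinese ideograph as a separate word. That is why it returns one token per character above, and why a dictionary-based analyzer such as IK is needed for Chinese.

Below is a rough Python approximation of that output. It covers only ASCII letters/digits and CJK unified ideographs. `toy_standard_tokens` is a hypothetical name, not the real tokenizer:

```python
import unicodedata


def toy_standard_tokens(text):
    """Rough imitation of the standard analyzer for ASCII words mixed with Chinese."""
    tokens, i = [], 0
    while i < len(text):
        ch = text[i]
        if ch.isascii() and ch.isalnum():
            j = i
            while j < len(text) and text[j].isascii() and text[j].isalnum():
                j += 1
            # The standard analyzer lowercases; pure-digit runs would be <NUM> in ES (not modeled here).
            tokens.append({"token": text[i:j].lower(), "start_offset": i,
                           "end_offset": j, "type": "<ALPHANUM>"})
            i = j
        elif unicodedata.name(ch, "").startswith("CJK UNIFIED IDEOGRAPH"):
            # Each ideograph becomes its own token.
            tokens.append({"token": ch, "start_offset": i,
                           "end_offset": i + 1, "type": "<IDEOGRAPHIC>"})
            i += 1
        else:
            i += 1  # toy: spaces, punctuation, and other scripts are dropped
    for position, tok in enumerate(tokens):
        tok["position"] = position
    return tokens


print([t["token"] for t in toy_standard_tokens("es插件来了")])  # ['es', '插', '件', '来', '了']
```

Offsets here are Python string indices. They match Elasticsearch's offsets for text like this, which contains no characters outside the Basic Multilingual Plane.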

With `ik_smart`:

```
GET _analyze
{
  "analyzer" : "ik_smart",
  "text" : "es插件来了"
}
```

Response:

```
{
  "tokens" : [
    {
      "token" : "es",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "ENGLISH",
      "position" : 0
    },
    {
      "token" : "插件",
      "start_offset" : 2,
      "end_offset" : 4,
      "type" : "CN_WORD",
      "position" : 1
    },
    {
      "token" : "来了",
      "start_offset" : 4,
      "end_offset" : 6,
      "type" : "CN_WORD",
      "position" : 2
    }
  ]
}
```

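With `ik_max_word`:

```
GET _analyze
{
  "analyzer" : "ik_max_word",
  "text" : "es插件来了"
}
```

Response:

```
{
  "tokens" : [
    {
      "token" : "es",
      "start_offset" : 0,
      "end_offset" : 2,
      "type" : "ENGLISH",
      "position" : 0
    },
    {
      "token" : "插件",
      "start_offset" : 2,
      "end_offset" : 4,
      "type" : "CN_WORD",
      "position" : 1
    },
    {
      "token" : "来了",
      "start_offset" : 4,
      "end_offset" : 6,
      "type" : "CN_WORD",
      "position" : 2
    }
  ]
}
```

For this short input both IK modes return the same tokens. The difference shows up on longer phrases, where `ik_max_word` also emits overlapping sub-words.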
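To compare analyzers without reading the JSON by eye, the token strings can be pulled out of each `_analyze` response.

Below is a minimal sketch. `analyze_body` and `token_list` are hypothetical helpers. The sample response is the ik_smart output above, reduced to its `token` and `position` fields:

```python
import json


def analyze_body(analyzer, text):
    """JSON body for GET _analyze, in the same shape as the requests above."""
    return json.dumps({"analyzer": analyzer, "text": text}, ensure_ascii=False)


def token_list(response_json):
    """Token strings, ordered by position, from an _analyze response."""
    tokens = json.loads(response_json)["tokens"]
    return [t["token"] for t in sorted(tokens, key=lambda t: t["position"])]


ik_smart_response = ('{"tokens": [{"token": "es", "position": 0}, '
                     '{"token": "插件", "position": 1}, {"token": "来了", "position": 2}]}')

print(analyze_body("ik_smart", "es插件来了"))  # {"analyzer": "ik_smart", "text": "es插件来了"}
print(token_list(ik_smart_response))           # ['es', '插件', '来了']
```

Comparing `token_list` results for two analyzers on the same text shows at a glance whether they split it differently.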